DirectX 10 and Microsoft’s Windows Vista operating system have been looming on the technological horizon for some time now. Although neither will be available until next year, many hardware enthusiasts have been saving up in readiness for an upgrade blitz in the run-up to Vista’s launch at the end of January. The two go hand in hand: because of the changes Microsoft has made to the way the software interacts with the hardware (the new Windows Display Driver Model), the DirectX 10 libraries will not be available for Windows XP.

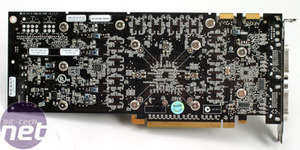

Today marks the launch of the world’s first DirectX 10 compliant video card. NVIDIA’s GeForce 8-series has been in development since 2002, and the flagship GeForce 8800 GTX, previously known by its codename G80, is one of the most complex pieces of silicon ever produced.

Since this is a mammoth article covering not only the graphics card but also the evolution of its design and its interaction with DirectX 10, here is a handy index to the bit-tech coverage:

- 1-2: Introduction and physical card

- 3-5: Architecture considerations for DX10

- 6-10: 8800 architecture, changes and image quality revisions

- 11-17: Real-world testing and benchmarks

- 18-19: Power consumption and conclusions

Who ever thought it’d be unified?

We have known for some time that Microsoft’s DirectX 10 API would be based around a unified programming model, but NVIDIA’s direction for GeForce 8 has been anything but clear. Nobody could say for certain whether the architecture would follow a traditional GPU design, with the separate vertex and pixel shader units we’re used to seeing, or a unified shader architecture like the one ATI designed for Microsoft’s Xbox 360.

Let’s face it, most people, including myself until recently, were expecting NVIDIA to implement DirectX 10 in a rather messy way, with separate vertex, pixel and geometry shaders (the last of these being new to DirectX 10). However, NVIDIA has confounded expectations and built its GeForce 8800 GPU with unification and massive parallelism in mind from the outset.

The result of this design decision is that NVIDIA’s flagship GeForce 8800 GTX comes with a dizzying 128 versatile shader processors that can be dynamically allocated to vertex, pixel, geometry or physics operations. The architecture is designed with efficiency in mind: because each of the 128 stream processors (as NVIDIA calls them) can handle any shader instruction, the silicon is rarely idle. But before we delve deeper into NVIDIA’s GeForce 8800 architecture, let’s have a look at the hardware.
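To show why keeping every unit busy matters, here's a deliberately simplified model comparing a hypothetical fixed split of 8 vertex and 24 pixel units with 32 unified units. The unit counts, the per-frame workloads and the names `FrameWork`, `fixed_split_time` and `unified_time` are all invented for illustration, and the model ignores scheduling overhead as well as the fact that pixel work depends on vertex work finishing first.

```python
from dataclasses import dataclass


@dataclass
class FrameWork:
    """Shader work in one frame, in arbitrary unit-cycles (hypothetical figures)."""
    vertex: float
    pixel: float

    @property
    def total(self) -> float:
        return self.vertex + self.pixel


def fixed_split_time(work: FrameWork, vertex_units: int, pixel_units: int) -> float:
    # Dedicated pools run side by side; the frame finishes when the slower
    # pool finishes, so the other pool sits idle for the remainder.
    return max(work.vertex / vertex_units, work.pixel / pixel_units)


def unified_time(work: FrameWork, units: int) -> float:
    # Idealised unified model: any unit can take any kind of work,
    # so the whole load is spread evenly across every unit.
    return work.total / units


def utilisation(work: FrameWork, units: int, frame_time: float) -> float:
    # Fraction of the available unit-cycles that did useful work.
    return work.total / (units * frame_time)


if __name__ == "__main__":
    scenes = {
        "geometry-heavy": FrameWork(vertex=400, pixel=400),
        "pixel-heavy": FrameWork(vertex=40, pixel=960),
    }
    for name, work in scenes.items():
        t_fixed = fixed_split_time(work, vertex_units=8, pixel_units=24)
        t_unified = unified_time(work, units=32)
        print(f"{name}: fixed {t_fixed:.2f} "
              f"({utilisation(work, 32, t_fixed):.0%} busy), "
              f"unified {t_unified:.2f} (100% busy)")
```

In the geometry-heavy scene the fixed design takes twice as long as the unified one, because its pixel units spend most of the frame waiting on the vertex units.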

GeForce 8800 GTX reference card:

The clock speeds work a bit differently on the GeForce 8-series: NVIDIA has moved away from a single traditional clock speed, and there are now three notable clocks in the architecture. The core clock, which you're probably familiar with, is 575MHz and represents the frequency of the pixel output engines (also known as ROPs). The 128 shader processors run at an incredible 1.35GHz by default, and the memory clock is set at 1.8GHz (effective).

The card itself is absolutely massive: at just over 27cm long it matches the 7900 GX2, so it's not going to fit in every case. The cooler is obviously dual-slot and has a very odd fan design, with fins around the outside of the unit rather than spoking out from the middle. The great thing is that while it looks odd, it's incredibly good at its job: this is one of the quietest coolers we've ever come across.
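The three clock figures quoted above can be turned into rough theoretical numbers. This is a back-of-the-envelope sketch, not NVIDIA's own methodology: it assumes a 384-bit memory interface (not stated on this page) and counts one multiply-add per stream processor per shader clock as two floating-point operations, ignoring any other per-clock work.

```python
# Figures quoted on this page for the GeForce 8800 GTX reference card.
CORE_CLOCK_MHZ = 575
SHADER_CLOCK_MHZ = 1350
MEMORY_CLOCK_EFFECTIVE_MHZ = 1800  # "effective" already counts both DDR transfers per clock
STREAM_PROCESSORS = 128

# Assumptions, not stated on this page:
MEMORY_BUS_BITS = 384          # width of the memory interface
FLOPS_PER_SP_PER_CLOCK = 2     # one multiply-add per stream processor per shader clock


def shader_to_core_ratio() -> float:
    """Shader clock ticks that elapse per core (ROP) clock tick."""
    return SHADER_CLOCK_MHZ / CORE_CLOCK_MHZ


def peak_mad_gflops() -> float:
    """Theoretical multiply-add throughput across all stream processors."""
    return STREAM_PROCESSORS * SHADER_CLOCK_MHZ * FLOPS_PER_SP_PER_CLOCK / 1000


def memory_bandwidth_gb_s() -> float:
    """Peak memory bandwidth in decimal GB/s (MHz x bytes = MB/s)."""
    bytes_per_transfer = MEMORY_BUS_BITS // 8
    return MEMORY_CLOCK_EFFECTIVE_MHZ * bytes_per_transfer / 1000


if __name__ == "__main__":
    print(f"shader/core clock ratio: {shader_to_core_ratio():.2f}")
    print(f"peak MAD throughput: {peak_mad_gflops():.1f} GFLOPS")
    print(f"memory bandwidth: {memory_bandwidth_gb_s():.1f} GB/s")
```

Under those assumptions the shaders tick roughly 2.35 times for every core clock, which works out to about 345.6 GFLOPS of multiply-add throughput and 86.4GB/s of memory bandwidth.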

You'll immediately notice the dual SLI connectors at the top of the card, designed for connecting multiple cards together. NVIDIA hasn't been specific about the use of the second SLI connector, but we believe it's related to the fact that the nForce 680i reference board has three PCI-Express x16 slots. We think it's either going to be used for SLI Physics, or for three-way SLI at some point in the future.

The first partner boards out of the gate will all follow this reference design: NVIDIA has had Flextronics build them for partners, who will add their own stickers to the cooler and box them up in their own manner. Other notable features on the board are the dual PCI-Express power connectors, a return to the days of the 6800 Ultra, which also required twice the power input considered 'normal'. The extra circuitry needed to regulate the dual power input is partly responsible for the extra length of the board.
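The arithmetic behind a second power connector is simple. This sketch assumes the PCI Express limits of 75W through the x16 slot and 75W per 6-pin connector; these are specification ceilings, not the 8800 GTX's measured draw, and the helper names are hypothetical.

```python
import math

# Power ceilings from the PCI Express specifications (assumed, not measured):
PCIE_X16_SLOT_W = 75   # delivered through the x16 graphics slot itself
PCIE_6PIN_W = 75       # per 6-pin PCI-Express auxiliary power connector


def max_board_power(six_pin_connectors: int) -> int:
    """Upper bound on board power with a given number of 6-pin connectors."""
    return PCIE_X16_SLOT_W + six_pin_connectors * PCIE_6PIN_W


def connectors_needed(board_watts: float) -> int:
    """Fewest 6-pin connectors that keep a board of this rating within spec."""
    shortfall = max(0.0, board_watts - PCIE_X16_SLOT_W)
    return math.ceil(shortfall / PCIE_6PIN_W)


if __name__ == "__main__":
    for n in (0, 1, 2):
        print(f"{n} connector(s): up to {max_board_power(n)}W")
```

Any board rated above 150W therefore needs a second connector, which raises the ceiling to 225W.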
